WHAT IS A COMPUTER?

- DERIVED FROM THE LATIN LANGUAGE

- WORD - "COMPUTE"

- MEANING - "TO CALCULATE"

- COMPUTER

The ABACUS was an early aid for mathematical computations. Its only value is that it aids the memory of the human performing the calculation. A skilled abacus operator can work on addition and subtraction problems at the speed of a person equipped with a hand calculator (multiplication and division are slower). The abacus is often wrongly attributed to China. In fact, the oldest surviving abacus was used in 300 B.C. by the Babylonians. The abacus is still in use today, principally in the Far East. A modern abacus consists of rings that slide over rods, but the older one pictured below dates from the time when pebbles were used for counting (the word "calculus" comes from the Latin word for pebble).

A very old abacus:

An original set of Napier's Bones [photo courtesy IBM]:

A more modern abacus. Note how the abacus is really just a representation of the human fingers: the 5 lower rings on each rod represent the 5 fingers and the 2 upper rings represent the 2 hands.

In 1614 an eccentric (some say mad) Scotsman named John Napier published his invention of logarithms, a technique that allows multiplication to be performed via addition. The magic ingredient is the logarithm of each operand, which was originally obtained from a printed table. But Napier also invented an alternative to tables, where the logarithm values were carved on ivory sticks which are now called Napier's Bones.
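The idea of multiplying by adding logarithms can be sketched in a few lines. This is only an illustration: the function name `multiply_via_logs` is made up for this example, and the calls to `math.log10` and the power operator stand in for the printed table lookups a human would have performed.

```python
import math


def multiply_via_logs(a: float, b: float) -> float:
    """Multiply two positive numbers using logarithms and a single addition.

    Hypothetical helper for illustration; math.log10 plays the role of the
    printed logarithm table, and 10 ** x plays the role of the antilog table.
    """
    if a <= 0 or b <= 0:
        raise ValueError("logarithms are only defined for positive numbers")
    log_sum = math.log10(a) + math.log10(b)  # the only arithmetic step is an addition
    return 10 ** log_sum                     # look the sum back up to get the product


if __name__ == "__main__":
    # Prints a value very close to 37 * 52 (small floating-point error is expected).
    print(multiply_via_logs(37.0, 52.0))
```

The result is only as precise as the logarithm values used, which is why the accuracy of the printed tables mattered so much.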

A more modern set of Napier's Bones:

Napier's invention led directly to the slide rule, first built in England in 1632 and still in use in the 1960s by the NASA engineers of the Mercury, Gemini, and Apollo programs, which landed men on the moon.

A slide rule:

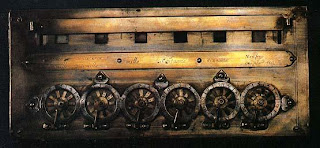

The first gear-driven calculating machine to actually be built was probably the calculating clock, so named by its inventor, the German professor Wilhelm Schickard, in 1623. This device got little publicity because Schickard died soon afterward of the bubonic plague. In 1642 Blaise Pascal, at age 19, invented the Pascaline as an aid for his father, who was a tax collector. Pascal built 50 of these gear-driven one-function calculators (they could only add) but couldn't sell many because of their exorbitant cost and because they really weren't that accurate (at that time it was not possible to fabricate gears with the required precision). Up until the present age when car dashboards went digital, the odometer portion of a car's speedometer used the very same mechanism as the Pascaline to increment the next wheel after each full revolution of the prior wheel. Pascal was a child prodigy. At the age of 12, he was discovered doing his version of Euclid's thirty-second proposition on the kitchen floor. Pascal went on to invent probability theory, the hydraulic press, and the syringe. Shown below is an 8-digit version of the Pascaline, and two views of a 6-digit version:
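The odometer-style carry described above can be sketched as follows. This is a simplified model, not a description of Pascal's actual gearing: the function name `add_one` and the list-of-digits representation are assumptions made for the example.

```python
def add_one(wheels: list[int]) -> list[int]:
    """Advance a bank of decimal wheels by one count.

    Hypothetical model: wheels[0] is the units wheel, wheels[1] the tens
    wheel, and so on. A wheel that completes a full revolution (9 -> 0)
    nudges the next wheel forward by one step, which is the carry.
    """
    result = wheels.copy()
    for i in range(len(result)):
        result[i] = (result[i] + 1) % 10
        if result[i] != 0:
            # This wheel did not roll over, so nothing is carried further.
            break
    return result


if __name__ == "__main__":
    # Units and tens wheels both show 9, so the carry ripples into the hundreds wheel.
    print(add_one([9, 9, 0, 0, 0, 0]))
```

Like a mechanical odometer, this model simply wraps around to all zeros when every wheel shows 9.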

Pascal's Pascaline:

A 6 digit model for those who couldn't afford the 8 digit model:

Jacquard's Loom showing the threads and the punched cards:

Jacquard's technology was a real boon to mill owners, but it put many loom operators out of work. Angry mobs smashed Jacquard looms and once attacked Jacquard himself. History is full of examples of labor unrest following technological innovation, yet most studies show that, overall, technology has actually increased the number of jobs.

A close-up of a Jacquard card:

By 1822 the English mathematician Charles Babbage was proposing a steam-driven calculating machine the size of a room, which he called the Difference Engine. This machine would be able to compute tables of numbers, such as logarithm tables. He obtained government funding for this project due to the importance of numeric tables in ocean navigation. By promoting their commercial and military navies, the British government had managed to become the earth's greatest empire. But at that time the British government was publishing a seven-volume set of navigation tables which came with a companion volume of corrections showing that the set had over 1000 numerical errors. It was hoped that Babbage's machine could eliminate errors in these types of tables. But construction of Babbage's Difference Engine proved exceedingly difficult, and the project soon became the most expensive government-funded project up to that point in English history.

Ten years later the device was still nowhere near complete, acrimony abounded between all involved, and funding dried up. The device was never finished.
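The text above does not say how the machine would compute its tables. The name refers to the method of finite differences, which produces successive values of a polynomial using nothing but repeated addition. The following is a minimal sketch of that method, not a model of Babbage's hardware; the function name `tabulate` and the example polynomial x^2 + x + 41 are choices made purely for illustration. Tables such as logarithms would first have to be approximated by polynomials, a step this sketch leaves out.

```python
def tabulate(initial_column: list[int], count: int) -> list[int]:
    """Tabulate a polynomial using only additions (method of finite differences).

    initial_column holds [p(0), first difference, second difference, ...],
    where the last entry is the constant difference. Hypothetical helper
    written for illustration.
    """
    column = list(initial_column)
    table = []
    for _ in range(count):
        table.append(column[0])
        # Each entry absorbs the difference below it; the last entry,
        # the constant difference, never changes.
        for i in range(len(column) - 1):
            column[i] += column[i + 1]
    return table


if __name__ == "__main__":
    # For p(x) = x^2 + x + 41: p(0) = 41, first difference p(1) - p(0) = 2,
    # and the second difference is the constant 2.
    print(tabulate([41, 2, 2], 5))
```

Because every step is a plain addition, a machine built this way needs no multiplication mechanism at all, and it never mis-copies a digit the way a human table-maker might.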

A small section of the type of mechanism employed in Babbage's Difference Engine:

After that, Charles Babbage designed the Analytic Engine. This device, as large as a house and powered by 6 steam engines, would be more general purpose in nature because it would be programmable, thanks to the punched card technology of Jacquard. Furthermore, Babbage realized that punched paper could be employed as a storage mechanism, holding computed numbers for future reference. Because of the connection to the Jacquard loom, Babbage called the two main parts of his Analytic Engine the "Store" and the "Mill", as both terms are used in the weaving industry. The Store was where numbers were held and the Mill was where they were "woven" into new results. In a modern computer these same parts are called the MEMORY UNIT and the CENTRAL PROCESSING UNIT (CPU).

The Analytic Engine also had a key function that distinguishes computers from calculators: the conditional statement. A conditional statement allows a program to achieve different results each time it is run. The path of the program (that is, which statements are executed next) is chosen according to a condition or situation that is detected at the very moment the program is running.
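In a modern language, a conditional statement might look like the sketch below. The function name `classify_reading` and its parameters are invented for this example; the point is only that the same program takes a different path depending on a value that is not known until the program runs.

```python
def classify_reading(reading: float, limit: float) -> str:
    """Return a different result depending on a condition tested at run time.

    Hypothetical example: which branch executes is not fixed when the
    program is written; it depends on the values supplied when it runs.
    """
    if reading > limit:
        return "over limit"
    return "within limit"


if __name__ == "__main__":
    print(classify_reading(12.5, 10.0))  # takes the first branch
    print(classify_reading(7.0, 10.0))   # takes the second branch
```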

Ada Byron was the daughter of the famous poet Lord Byron. Though she was only 19, she was fascinated by Babbage's ideas, and through letters and meetings with Babbage she learned enough about the design of the Analytic Engine to begin fashioning programs for the still unbuilt machine. While Babbage refused to publish his knowledge for another 30 years, Ada wrote a series of "Notes" wherein she detailed sequences of instructions she had prepared for the Analytic Engine. The Analytic Engine remained unbuilt (the British government refused to get involved with this one) but Ada earned her spot in history as the first computer programmer. Ada invented the subroutine and was the first to recognize the importance of looping. Babbage himself went on to invent the modern postal system, cowcatchers on trains, and the ophthalmoscope, which is still used today to examine the eye.

An operator working at a Hollerith Desk like the one below:

Hollerith's invention, known as the Hollerith desk, consisted of a card reader which sensed the holes in the cards, a gear-driven mechanism which could count (using Pascal's mechanism, which we still see in car odometers), and a large wall of dial indicators (a car speedometer is a dial indicator) to display the results of the count.
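The three parts of the desk (sense the holes, count, display the totals) can be mimicked with a short tally. This is a loose sketch, not a model of the real card layout: the function name `tabulate_cards`, the representation of a card as a set of punched positions, and the position labels are all hypothetical.

```python
from collections import Counter


def tabulate_cards(cards: list[set[str]]) -> Counter:
    """Count how many cards have a hole at each position.

    Hypothetical model: each card is a set of labels naming the positions
    that were punched. One counter per position plays the role of one dial.
    """
    dials = Counter()
    for card in cards:       # the card reader takes one card at a time
        for hole in card:    # each sensed hole advances its own dial by one
            dials[hole] += 1
    return dials


if __name__ == "__main__":
    sample_cards = [{"male", "farmer"}, {"female", "farmer"}, {"male"}]
    for position, count in sorted(tabulate_cards(sample_cards).items()):
        print(position, count)
```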

Hollerith's technique was successful and the 1890 census was completed in only 3 years at a savings of 5 million dollars.

Interesting aside: the reason that a person who removes inappropriate content from a book or movie is called a censor, as is a person who conducts a census, is that in Roman society the public official called the "censor" had both of these jobs.

Hollerith built a company, the Tabulating Machine Company which, after a few buyouts, eventually became INTERNATIONAL BUSINESS MACHINES, known today as IBM. IBM grew rapidly and punched cards became ubiquitous. Your gas bill would arrive each month with a punch card you had to return with your payment. IBM continued to develop mechanical calculators for sale to businesses to help with financial accounting and inventory accounting. But the U.S. military desired a mechanical calculator more optimized for scientific computation.

Two types of computer punch cards:

One early success was the Harvard Mark I computer which was built as a partnership between Harvard and IBM in 1944. This was the first programmable digital computer made in the U.S. But it was not a purely electronic computer.

Here's a close-up of one of the Mark I's four paper tape readers. A paper tape was an improvement over a box of punched cards as anyone who has ever dropped -- and thus shuffled -- his "stack" knows.

One of the four paper tape readers on the Harvard Mark I (you can observe the punched paper roll emerging from the bottom):

One of the primary programmers for the Mark I was a woman, Grace Hopper. Hopper found the first computer "bug": a dead moth that had gotten into the Mark I and whose wings were blocking the reading of the holes in the paper tape. The word "bug" had been used to describe a defect since at least 1889 but Hopper is credited with coining the word "debugging" to describe the work to eliminate program faults.

The first computer bug:

In 1953 Grace Hopper invented the first high-level language, "Flow-matic". This language eventually became COBOL, which was the language most affected by the infamous Y2K problem. A high-level language is designed to be more understandable by humans than is the binary language understood by the computing machinery. A high-level language is worthless without a program, known as a compiler, to translate it into the binary language of the computer, and hence Grace Hopper also constructed the world's first compiler. Grace remained active as a Rear Admiral in the Navy Reserves until she was 79.

On a humorous note, the principal designer of the Mark I, Howard Aiken of Harvard, estimated in 1947 that six electronic digital computers would be sufficient to satisfy the computing needs of the entire United States. IBM had commissioned this study to determine whether it should bother developing this new invention into one of its standard products (up until then computers were one-of-a-kind items built by special arrangement). Aiken's prediction wasn't actually so bad, as there were very few institutions (principally, the government and military) that could afford the cost of what was called a computer in 1947. He just didn't foresee the micro-electronics revolution which would allow something like an IBM Stretch computer of 1959:

(that's just the operator's console, here's the rest of its 33 foot length:)

The Atanasoff-Berry Computer:

One of the earliest attempts to build an all-electronic (that is, no gears, cams, belts, shafts, etc.) digital computer was begun in 1937 by J. V. Atanasoff, a professor of physics and mathematics at Iowa State University. By 1941 he and his graduate student, Clifford Berry, had succeeded in building a machine that could solve 29 simultaneous equations with 29 unknowns. This machine was the first to store data as a charge on a capacitor, which is how today's computers store information in their main memory (DRAM, or dynamic RAM).

The IBM Stretch computer of 1959 needed its 33 foot length to hold the 150,000 transistors it contained. These transistors were far smaller than the vacuum tubes they replaced, but they were still individual elements requiring individual assembly. By the early 1980s this many transistors could be simultaneously fabricated on an integrated circuit. Today's Pentium 4 microprocessor contains 42,000,000 transistors in this same thumbnail-sized piece of silicon.

It's humorous to remember that in between the Stretch machine (which would be called a mainframe today) and the Apple I (a desktop computer) there was an entire industry segment referred to as mini-computers, such as the following PDP-12 computer of 1969:

The Teletype was the standard mechanism used to interact with a time-sharing computer:

The original IBM Personal Computer (PC)

This transformation was a result of the invention of the microprocessor. A microprocessor (uP) is a computer that is fabricated on an integrated circuit (IC). Computers had been around for 20 years before the first microprocessor was developed at Intel in 1971. The micro in the name microprocessor refers to the physical size. Intel didn't invent the electronic computer, but it was the first to succeed in cramming an entire computer onto a single chip (IC). Intel was started in 1968 and initially produced only semiconductor memory (Intel invented both the DRAM and the EPROM, two memory technologies that are still going strong today). Its first microprocessor was the Intel 4004. The 4004 consisted of 2300 transistors and was clocked at 108 kHz (i.e., 108,000 cycles per second). Compare this to the 42 million transistors and the 2 GHz clock rate (i.e., 2,000,000,000 cycles per second) used in a Pentium 4. One of Intel's 4004 chips still functions aboard the Pioneer 10 spacecraft, which is now the man-made object farthest from the earth.
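The comparison between the 4004 and the Pentium 4 can be put into numbers with a little arithmetic. The figures are taken from the paragraph above; the variable names are just labels chosen for this sketch.

```python
# Figures quoted in the text above.
clock_4004_hz = 108_000            # 108 kHz
clock_p4_hz = 2_000_000_000        # 2 GHz
transistors_4004 = 2_300
transistors_p4 = 42_000_000

# How many times faster the clock runs, and how many times more transistors.
clock_ratio = clock_p4_hz / clock_4004_hz
transistor_ratio = transistors_p4 / transistors_4004

if __name__ == "__main__":
    print(f"clock rate ratio:       {clock_ratio:,.0f}x")
    print(f"transistor count ratio: {transistor_ratio:,.0f}x")
```

Both ratios come out to roughly 18,000, so by these figures the clock rate and the transistor count grew by about the same factor.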